What is Docker?

Docker is a platform for developing, shipping, and running applications in containers. A container packages an application with all of its dependencies into a standardised unit, which makes it easy to deploy across different environments.

Why use Docker?

Docker offers several benefits, including:

Consistency: A container encapsulates the application code, runtime, libraries, and dependencies, so the application behaves the same way in every environment, from development and testing through staging to production.

Isolation: Containers provide a level of isolation for applications. Each container has its own filesystem, processes, network interfaces, and resource limits, which prevents conflicts between dependencies. Isolation also helps security by limiting the impact of potential vulnerabilities and reducing the attack surface.

Efficiency: Containers are lightweight and share the host OS kernel, leading to faster startup times and reduced overhead compared to traditional virtual machines. This efficiency allows organisations to maximise resource utilisation and optimise infrastructure costs, especially in large-scale deployments.

Scalability: Docker makes it easy to scale applications by quickly spinning up additional container instances to handle increased workloads and user requests. Because containers are lightweight and portable, it is easy to deploy and manage multiple instances of an app across distributed environments such as on-premises data centres, public cloud platforms, and hybrid infrastructures. Orchestration tools such as Docker Swarm (built into Docker) and Kubernetes (a separate project) further streamline the management of containerised applications and provide automated scaling, which helps maintain performance and availability.

How does it Work?

Containerisation vs Virtualisation:-

Docker uses containerisation, which is a lightweight form of virtualisation. Instead of emulating hardware and booting a full guest operating system, containers isolate applications while sharing the host kernel, allowing for faster startup times and more efficient resource usage.

Virtualisation:-

Virtualisation allows you to run multiple operating systems on a single physical machine using software known as a hypervisor. The hypervisor creates virtual machines (VMs), each of which emulates a complete hardware environment, including virtualised CPU, memory, storage, and network interfaces. This lets you run different operating systems and applications independently, each within its own isolated VM, on the same physical server.

- Example: Imagine you have a single physical computer (server) running a hypervisor software like VMware or VirtualBox. You can create multiple virtual machines (VMs) on this server, each running its own operating system (e.g., Windows, Linux) and applications.

Containerisation:-

Containerisation is a lightweight form of virtualisation that lets you run multiple isolated applications (containers) on a single host OS. Rather than virtualising an entire machine and guest operating system as a VM does, containers isolate at the application level. Each container has its own isolated filesystem, processes, and network stack, but shares the host OS kernel. This makes containers more portable, lightweight, and efficient than traditional virtual machines.

- Example: Imagine you have a single physical computer (server) running Docker. You can create multiple containers on this server, each containing a specific application and its dependencies. These containers share the same host operating system kernel but are isolated from each other, allowing them to run independently and efficiently.
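To see the shared kernel for yourself, here is a minimal sketch. It assumes Docker is installed and that the public alpine image can be pulled; the helper name container_kernel is made up for this example and is not part of Docker.

```shell
# Hypothetical helper: print the kernel release seen from inside a throwaway
# container (the alpine image is an assumption; any small Linux image works).
container_kernel() {
  docker run --rm alpine uname -r
}

# Usage: compare the host's kernel with the one the container reports.
#   uname -r
#   container_kernel
```

On a Linux host both commands should print the same release, because the container has no kernel of its own. On Docker Desktop for macOS or Windows, containers run inside a small Linux VM, so the container reports that VM's kernel instead.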

Docker’s Architecture:-

Docker Runtime:-

The Docker runtime is the software layer responsible for starting and stopping containers. It is a crucial component of Docker.

There are two types of runtime:-

- runc (low-level runtime): works with the operating system to start and stop containers.

- containerd (high-level runtime): manages runc and implements the CRUD operations on containers in Docker.

containerd also implements the CRI (Container Runtime Interface). The CRI is a standard interface that defines how container runtimes communicate with container orchestration systems like Kubernetes. This allows Kubernetes to work with any container runtime that implements the CRI, such as containerd, CRI-O, and others.

Docker Engine:-

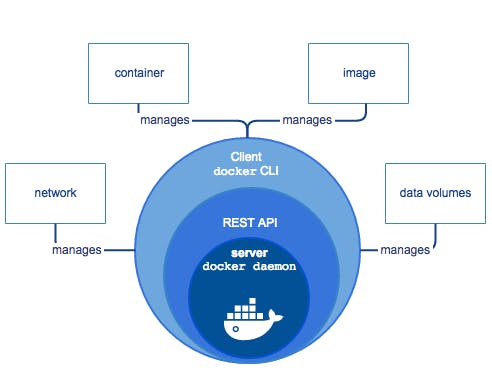

Docker Engine is the combination of:

- Docker Daemon (dockerd)

- Docker Client (docker)

These components form the core of Docker, which lets users build, ship, and run containerised applications.

Docker Daemon :-

The Docker Daemon is responsible for managing Docker objects such as images, containers, networks, and volumes. It also handles the API requests made through the Docker Client.

Docker Client :-

The Docker Client is the primary user interface to Docker. Through the Docker Client, users can interact with Docker. It provides a command-line interface (CLI) that allows users to run interactive commands to create, run, monitor, stop, and manage Docker containers, images, networks, and volumes. The Docker Client communicates with the Docker Daemon, which does the heavy lifting of building, running, and managing Docker containers. The Docker Client and Daemon can run on the same host, or they can communicate over a network.

Docker Registry :-

Docker Registry is a server-side application that stores Docker images. It allows users to push and pull images for use in their applications. Docker provides a public registry known as Docker Hub, where users can upload and download images. Users can also set up their own private registries for internal use.
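As a rough sketch of the push and pull flow against a private registry, the helper below starts a throwaway registry on the local machine. The function name, the port 5000, and the ubuntu:22.04 tag are assumptions chosen for illustration; registry:2 is the publicly available registry image on Docker Hub.

```shell
# Hypothetical helper: run a throwaway private registry on localhost:5000,
# push an image to it, and pull it back.
private_registry_demo() {
  # Start the registry in the background.
  docker run -d -p 5000:5000 --name registry registry:2
  # Fetch an image from Docker Hub, the public registry.
  docker pull ubuntu:22.04
  # Re-tag it so its name points at the private registry.
  docker tag ubuntu:22.04 localhost:5000/ubuntu:22.04
  # Upload it, then download it again from the private registry.
  docker push localhost:5000/ubuntu:22.04
  docker pull localhost:5000/ubuntu:22.04
}
```

When you are done experimenting, `docker rm -f registry` removes the registry container again.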

Docker Orchestration :-

Orchestration is the process of managing, deploying, and scaling containerised apps across a cluster of Docker hosts. Tasks like container deployment, scheduling, load balancing, service discovery, and resource management are hard to do manually, so orchestration automates them to keep containerised applications running efficiently and reliably in production environments.

There are several orchestration tools and frameworks that simplify working with containerised applications and the infrastructure they run on.

Two commonly used orchestration tools are Docker Swarm and Kubernetes.

Docker Swarm :-

Docker Swarm is Docker's native clustering and scheduling tool. It allows IT administrators and developers to create and manage a cluster (a swarm) of Docker nodes as a single virtual system. Swarm mode also lets users scale deployments, define networks and mount points for services, update services, and balance load.

Key features of Docker Swarm include:

Service Discovery: Docker Swarm provides built-in service discovery for services deployed in the swarm. This means that services can communicate with each other by using service names instead of IP addresses.

Load Balancing: Docker Swarm has built-in load balancing features. It can distribute service tasks evenly among nodes in the swarm.

Scaling: Docker Swarm allows you to scale your applications by increasing or decreasing the number of replicas of services based on the load.

Rolling Updates and Rollbacks: Docker Swarm supports rolling updates, allowing you to update a service gradually without downtime. It also supports rollbacks, allowing you to revert to a previous version of a service if necessary.

Secure: Docker Swarm uses Transport Layer Security (TLS) for secure communication between nodes in the swarm. It also supports secrets management, allowing you to securely store and manage sensitive data, such as passwords and API keys.

High Availability: Docker Swarm ensures high availability of services. If a node fails, Docker Swarm schedules the node's tasks on other nodes.

Declarative Service Model: Docker Swarm uses a declarative model to define the desired state of services. This means that you only need to specify what you want to achieve, and Docker Swarm takes care of how to achieve it.

Fault Tolerance: If a node in a swarm fails, Docker Swarm can automatically assign its tasks to other nodes to ensure the continued availability of services.
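The features listed above map onto a handful of docker service commands. The following is a minimal sketch, assuming a single test machine with Docker installed; the function name, the service name web, the port 8080, and the nginx images are all illustrative choices rather than anything Docker prescribes.

```shell
# Hypothetical helper: walk through the main Swarm features on one node.
swarm_demo() {
  # Turn this host into a single-node swarm (it becomes a manager).
  docker swarm init
  # Declarative model: ask for 3 replicas and let Swarm work out placement.
  docker service create --name web --replicas 3 --publish 8080:80 nginx
  # Scaling: change the desired number of replicas.
  docker service scale web=5
  # Rolling update: replace tasks gradually with a new image.
  docker service update --image nginx:alpine web
  # Rollback: return to the previous service definition.
  docker service rollback web
  # Inspect where the tasks are running.
  docker service ps web
}
```

To clean up afterwards, `docker service rm web` removes the service and `docker swarm leave --force` takes the machine out of swarm mode.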

Kubernetes:-

Kubernetes is an open-source platform designed to automate deploying, scaling, and operating application containers. With Kubernetes, you can quickly and efficiently respond to customer demand:

Deploy your applications quickly and predictably.

Scale your applications on the fly.

Seamlessly roll out new features.

Optimise use of your hardware by using only the resources you need.

Kubernetes is:

Portable: public, private, hybrid, multi-cloud

Extensible: modular, pluggable, hook-able, composable

Self-healing: auto-placement, auto-restart, auto-replication, auto-scaling

Kubernetes provides several key features that complement Docker's containerisation capabilities:

Automatic Bin-packing: Kubernetes automatically schedules the deployment of containers based on resource usage and constraints, without sacrificing availability. This ensures optimal utilisation of resources.

Service Discovery and Load Balancing: Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes can load balance and distribute the network traffic so that the deployment stays stable.

Storage Orchestration: Kubernetes allows users to automatically mount a storage system of their choice, such as local storage, public cloud providers, and more.

Self-healing: Kubernetes can replace and reschedule containers when nodes die. It also kills containers that don’t respond to user-defined health checks, and doesn't advertise them to clients until they are ready to serve.

Secret and Configuration Management: Kubernetes lets you store and manage sensitive information, such as passwords, OAuth tokens, and SSH keys. It can update and change application configuration without rebuilding the container images and without exposing secrets in the stack configuration.

Horizontal Scaling: With Kubernetes, applications can be scaled manually or automatically based on CPU usage.

Automated Rollouts and Rollbacks: Kubernetes progressively rolls out changes to your application or its configuration, while monitoring application health to ensure it doesn't kill all your instances at the same time. If something goes wrong, Kubernetes will roll back the change for you.

Batch Execution: In addition to services, Kubernetes can manage your batch and CI workloads, thus replacing containers that fail, if desired.

These features make Kubernetes a powerful platform for managing and orchestrating Docker containers at scale.
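A few of these features can be tried from the command line with kubectl. This is only a sketch: it assumes a running cluster with kubectl already configured, and the function name, the deployment name web, and the nginx images are made up for the example. CPU-based autoscaling also needs a metrics source in the cluster, and the exact autoscale flags may differ between kubectl versions.

```shell
# Hypothetical helper: exercise scaling, rollouts and rollbacks with kubectl.
k8s_demo() {
  # Create a deployment; its container is named after the image (nginx).
  kubectl create deployment web --image=nginx
  # Service discovery and load balancing: give the pods one stable address.
  kubectl expose deployment web --port=80
  # Horizontal scaling, first manually and then automatically on CPU usage.
  kubectl scale deployment web --replicas=3
  kubectl autoscale deployment web --min=2 --max=5 --cpu-percent=80
  # Automated rollout of a new image, then a rollback to the previous one.
  kubectl set image deployment/web nginx=nginx:alpine
  kubectl rollout status deployment/web
  kubectl rollout undo deployment/web
}
```

`kubectl delete deployment,service,hpa web` removes everything the sketch created.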

Dockerfile :-

A Dockerfile is a text file that contains the instructions for building a Docker image. These instructions identify an existing Docker image to use as a base, the commands to run while the image is being created, and the executable to run when a container is launched from the image. Here's an example:

FROM ubuntu

LABEL maintainer="yash <yash@gmail.com>"

RUN apt-get update

CMD ["echo", "Hello World"]
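To try the Dockerfile above, save it as a file named Dockerfile in an otherwise empty directory and build it. The sketch below assumes Docker is installed; the function name and the hello-demo image name are invented for this example.

```shell
# Hypothetical helper: build the image and run one container from it.
# The optional argument is the build context, i.e. the directory that holds
# the Dockerfile (defaults to the current directory).
build_and_run_hello() {
  docker build -t hello-demo "${1:-.}"
  # --rm deletes the container once the CMD has finished.
  docker run --rm hello-demo
}
```

Running `build_and_run_hello` in that directory should end by printing Hello World, because the image's CMD is the echo command.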

Docker Image:-

A Docker image is a lightweight, stand-alone, executable package that includes everything needed to run a piece of software, including the code, a runtime, libraries, environment variables, and config files.

Docker images are built from Dockerfiles, which are scripts containing a series of instructions that Docker uses to build the image. A single image can be used to create multiple containers. Docker images can be distributed and run on any system that has Docker installed, which makes them versatile for deploying software across a range of environments and platforms.

$ docker pull ubuntu:18.04         # 18.04 is the tag (version)

$ docker images                    # lists Docker images

$ docker run <image>               # creates a container from an image

$ docker rmi <image>               # deletes a Docker image if no container is using it

$ docker rmi $(docker images -q)   # deletes all Docker images that no container is using

Key-points about Docker Images:-

Docker images are read-only: because an image is immutable, it cannot be modified after it is created.

Docker images are built on a layered filesystem, where each layer represents a set of changes to the filesystem. This allows for efficient image sharing and incremental updates.

Docker builds use layer caching to speed up the build process. Docker reuses layers from previously built images if they have not changed, which reduces build times.

Docker images are stored and managed in registries such as Docker Hub, Google Container Registry, and Amazon Elastic Container Registry. Registries allow users to share, distribute, and pull images from a centralised location.

Each layer receives an ID calculated as the SHA-256 hash of the layer's contents, so the hash changes whenever the contents of the layer change. When a container runs, a thin writable layer is added on top of the read-only image layers, and data is written to that last layer.
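The idea of an ID derived from content can be illustrated without Docker at all. The sketch below hashes two made-up strings standing in for two versions of a layer; real layer IDs are computed over the layer's actual data rather than a short string, so treat this only as a demonstration of the principle. The helper name digest is an assumption.

```shell
# Hypothetical helper: print the SHA-256 digest of a string.
# Uses sha256sum (GNU coreutils) and falls back to shasum (macOS).
digest() {
  if command -v sha256sum >/dev/null 2>&1; then
    printf '%s' "$1" | sha256sum
  else
    printf '%s' "$1" | shasum -a 256
  fi | cut -d ' ' -f 1
}

# Stand-ins for two versions of a layer's contents (made up for illustration).
layer_v1="$(digest 'app files, version 1')"
layer_v2="$(digest 'app files, version 2')"

echo "v1: sha256:$layer_v1"
echo "v2: sha256:$layer_v2"   # differs from v1 because the contents differ
```

Hashing the same contents again always gives the same digest, which is what lets unchanged layers be recognised and reused.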

$ docker images

REPOSITORY    TAG      IMAGE ID       CREATED         SIZE

mongo         latest   35e5c11c442d   4 days ago      721MB

ubuntu        latest   a50ab9f16797   2 weeks ago     69.2MB

hello-world   latest   ee301c921b8a   10 months ago   9.14kB

- You can see that the first 12 characters of each hash are equal to the IMAGE ID:

$ docker images -q --no-trunc

sha256:35e5c11c442d7945ae38f403a0a7b6446f9728c550f9d60e0ee24087afbdd443

sha256:a50ab9f167975489853cbffd2be3bcadab3a9da27faf390ac48603c60d5c59e7

sha256:ee301c921b8aadc002973b2e0c3da17d701dcd994b606769a7e6eaa100b81d44
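The relationship between the full hash and the short IMAGE ID can be checked with plain shell, using the three hashes shown in this section. The helper name short_id is made up for the example.

```shell
# Hypothetical helper: strip the "sha256:" prefix and keep the first
# 12 characters, which is the short form shown in the IMAGE ID column.
short_id() {
  printf '%s\n' "${1#sha256:}" | cut -c 1-12
}

for full_id in \
  sha256:35e5c11c442d7945ae38f403a0a7b6446f9728c550f9d60e0ee24087afbdd443 \
  sha256:a50ab9f167975489853cbffd2be3bcadab3a9da27faf390ac48603c60d5c59e7 \
  sha256:ee301c921b8aadc002973b2e0c3da17d701dcd994b606769a7e6eaa100b81d44
do
  short_id "$full_id"
done
# prints:
# 35e5c11c442d
# a50ab9f16797
# ee301c921b8a
```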

Containers:-

Docker containers are lightweight and portable, so you can move them around, share them with others, and run them on different systems without trouble. Think of a container as a box that contains everything needed to run a specific program or application: the program itself plus its files, settings, and tools. A container can be connected to one or more networks, have storage attached to it, and be used to create a new image based on its current state. Data written inside a container is lost when the container is deleted, unless it is stored in attached storage such as a volume.

To manage these boxes and apply CRUD operations to them easily, we use Docker (through the Docker API or CLI). It lets you package your program and everything it needs into a container.
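Here is a small sketch of how attached storage keeps data around after a container is deleted. It assumes Docker is installed and the alpine image can be pulled; the function name, the volume name demo-data, and the file path are invented for the example.

```shell
# Hypothetical helper: show that data in a named volume outlives a container.
volume_demo() {
  # Create a named volume that Docker manages outside any container.
  docker volume create demo-data
  # First container writes a file into the volume, then is removed (--rm).
  docker run --rm -v demo-data:/data alpine sh -c 'echo hello > /data/greeting.txt'
  # A brand-new container mounts the same volume and can still read the file.
  docker run --rm -v demo-data:/data alpine cat /data/greeting.txt
  # Clean up the volume once it is no longer needed.
  docker volume rm demo-data
}
```

The second container should print hello even though the container that wrote the file no longer exists.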

Docker Commands:-

docker pull <image>:

This command downloads a Docker image from a Docker registry (like Docker Hub) to your local machine.

docker build -t <tag> <path>:

This command builds a Docker image from a Dockerfile. You specify a tag (name) for the image and the path to the build context, which is the directory that contains the Dockerfile (use -f to point at a Dockerfile stored elsewhere).

docker run <image>:

This command runs a Docker container based on a specific Docker image. If the image is not available locally, Docker will automatically pull it from a registry.

docker ps:

This command lists the currently running Docker containers on your system.

docker ps -a:

This command lists all Docker containers on your system, including those that are not currently running.

docker stop <container>:

This command stops a running Docker container. You specify the container's ID or name.

docker rm <container>:

This command removes a Docker container from your system. You specify the container's ID or name.

docker images:

This command lists all Docker images that are available locally on your system.

docker rmi <image>:

This command removes a Docker image from your system. You specify the image's ID or name.

docker exec -it <container> <command>:

This command allows you to execute a command inside a running Docker container. You specify the container's ID or name, followed by the command you want to run.
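Put together, the commands above cover a container's whole life cycle. The following sketch strings them into one sequence; it assumes Docker is installed, and the function name, the container name web-demo, and the choice of the nginx image are illustrative only.

```shell
# Hypothetical helper: pull, run, inspect, stop and remove a container.
lifecycle_demo() {
  docker pull nginx                       # download the image
  docker run -d --name web-demo nginx     # start a container in the background
  docker ps                               # web-demo is listed as running
  docker exec web-demo ls /usr/share/nginx/html   # run a command inside it
  docker stop web-demo                    # stop the container
  docker ps -a                            # still listed, but no longer running
  docker rm web-demo                      # remove the container
  docker rmi nginx                        # remove the image
}
```

In an interactive terminal, `docker exec -it web-demo sh` opens a shell inside the running container instead of running a single command.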

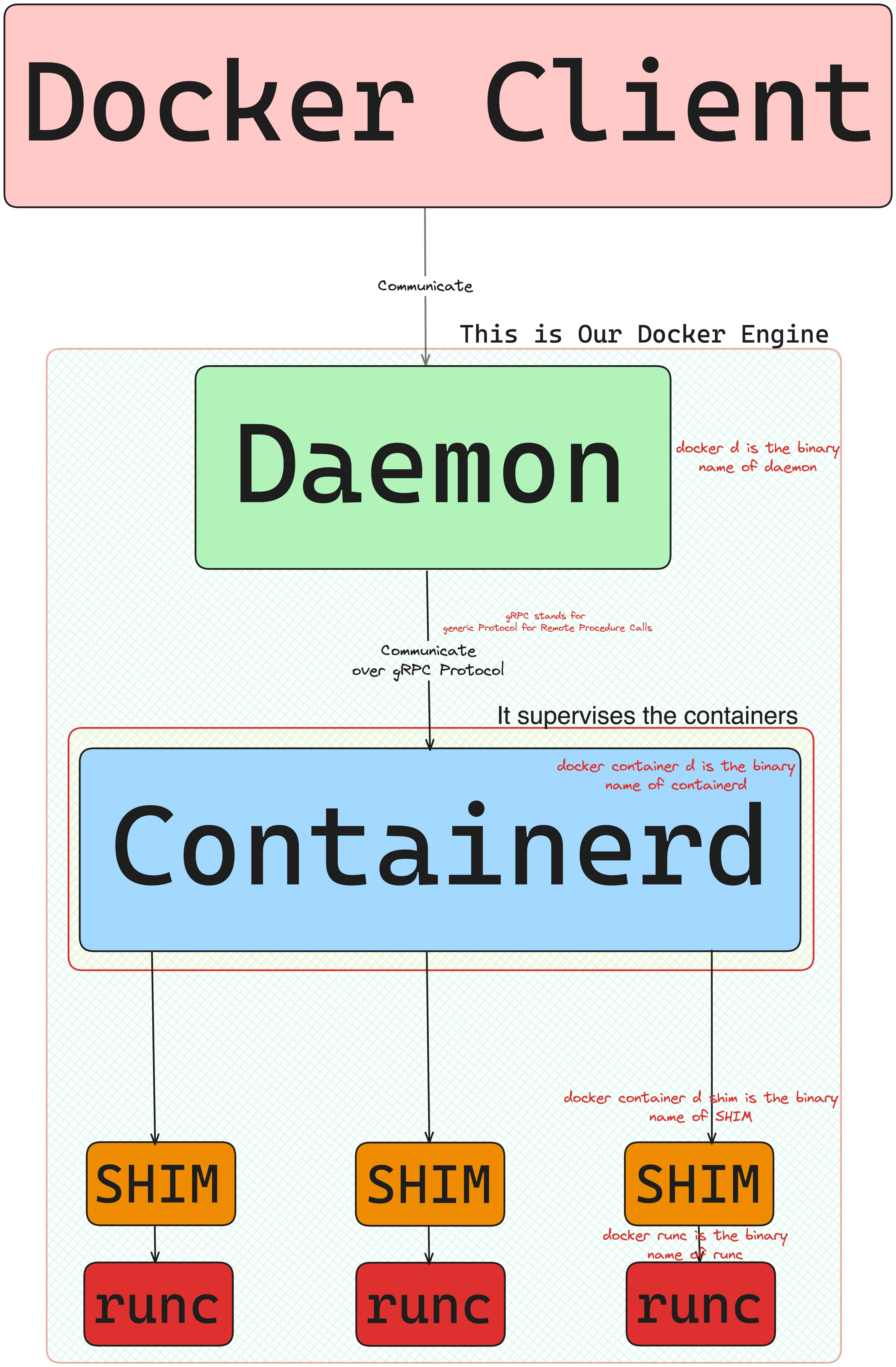

Architecture of Docker Engine:-

Early versions of Docker relied on LXC (Linux Containers) and a monolithic daemon, which made the platform hard to scale and evolve. The OCI (Open Container Initiative) then defined standard specifications for container runtimes and images, and the Docker Engine was split into smaller components, including containerd and runc.

Docker Client = The CLI (command-line tool) used to interact with the Docker Daemon.

Daemon = Previously the daemon held all of the logic for running containers, so if the daemon stopped, the containers that were running stopped too, which hurt the whole application. That is where containerd and the shim come into the picture. Once a container has been created, runc exits, and the shim acts as the bridge to the running container. Containers run this way are known as daemonless containers.

containerd (originally a Docker project, now a CNCF project) = It manages runc and handles tasks such as networking and pulling and pushing images. It is also used in Kubernetes. containerd does not create containers itself; it is runc that creates and executes them.

SHIM = A bridge between containerd and runc which ensures that container processes are still managed, and stay isolated from the Docker daemon and from other containers.

runc = The low-level runtime that starts and stops containers.
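On a Linux host you can usually spot these pieces from the command line. This is a rough sketch under a few assumptions: Docker is installed natively (not inside the Docker Desktop VM), at least one container is running, and the shim processes have containerd-shim in their name, which is the case on current releases but is not guaranteed. The function name is made up.

```shell
# Hypothetical helper: show the default runtime and any running shims.
show_runtime_pieces() {
  # Name of the default low-level runtime as reported by the daemon.
  docker info --format '{{.DefaultRuntime}}'
  # Shim processes, one per running container; the [c] stops grep from
  # matching its own command line.
  ps -eo pid,args | grep '[c]ontainerd-shim' || echo "no shim processes visible"
}
```

On a typical installation the first command reports runc, matching the low-level runtime described above.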

Looking for a handy guide to Docker CLI commands? You're in luck!

Docker CLI is your go-to tool for managing Docker installations. From starting containers to managing configurations, it's essential for Docker operations.

The given blog has curated a cheat sheet of key Docker commands for easy reference. Whether you're learning Docker or need quick command info, this list has you covered.

Here's a sneak peek:

General Commands: Get started with basics like displaying Docker info or accessing help.

Build Images: Learn to build images from Dockerfiles with various options.

Run Containers: Discover commands for running containers, including options for naming, networking, and more.

Manage Containers: Once containers are running, manage them efficiently with commands like pausing, stopping, or removing.

Copy to and From Containers: Easily move files between containers and your host machine using docker cp.

Execute Commands in Containers: Run commands inside containers with docker exec for easy management.

Access Container Logs: View and follow container logs to monitor processes.

View Container Resource Utilization: Check container resource usage with docker stats for better performance monitoring.

Manage Images: Handle images effectively by listing, tagging, pulling, and pushing them as needed.

Manage Networks: Administer Docker networks on your host with creation, connection, and removal commands.

Manage Volumes: Control storage volumes with creation, listing, and deletion commands.

Use Configuration Contexts: Simplify Docker daemon connections with configuration contexts.

Create SBOMs: Generate Software Bill of Materials (SBOMs) for container images for better visibility.

Scan for Vulnerabilities: Scan images for vulnerabilities using Docker's built-in scanner powered by Snyk.

Docker Hub Account: Interact with your Docker Hub account through login, logout, and search commands.

Clean Up Unused Resources: Keep your Docker environment tidy with commands to prune unused data.

For detailed instructions and more Docker insights, visit the given blog. Plus, explore how Spacelift simplifies integration with third-party tools and Docker images for smoother workflows.

Ready to master Docker CLI? Dive into the cheat sheet and level up your Docker game today!